By Sarah T. Roberts

Sticks and stones may break my bones, but hate on social media morphs into real-life violence. Indeed, it is well known that the hate-motivated murderers of Black, Jewish and LGBTQ Americans in recent years began their descent into extremism on social media. Just a few weeks ago, LGBTQ ally Lauri Carleton was shot and killed after a dispute over a Pride flag outside of her store. The pinned tweet on the suspected killer’s Twitter/X profile showed a burning Pride flag, and he regularly retweeted content that falsely attacked LGBTQ people as ‘groomers,’ even though such egregious anti-LGBTQ hate content is supposedly not allowed on X/Twitter.

Not only does hate speech on social media hurt community members when it is targeted at them directly or spotted by them while scrolling, but it fuels offline discrimination and violence. Social media platforms, including X (formerly Twitter), have developed — and claim to continue to maintain — their hate speech policies for a reason: to protect all users from being targeted by or exposed to content that diminishes their safety or that negatively impacts the usability of the platform.

Importantly, hate speech policies are also designed to meet the needs of advertisers, who wisely don’t want their brands associated with the steady flow of misogynistic, antisemitic, Islamophobic, racist, xenophobic, and anti-LGBTQ posts that now flood the platform.

As part of their policy development, undertaken to protect users and advertisers (which is to say, their own basic business interests), Twitter and all major social media companies have historically solicited the input of civil society groups, including GLAAD, as external stakeholders who provide guidance to keep policies and products safe. This valued process of stakeholder input is one of the established best practices in the field of social media trust and safety. At Twitter, such guidance came in part from the company’s voluntary Trust & Safety Council, whose members included GLAAD and other organizations such as the ADL. The company’s new owner unceremoniously dissolved the council in the fall of 2022, leaving a profound vacuum that all manner of abusive material quickly and predictably filled.

X’s subsequent replacement for genuine trust and safety, a policy dubbed ‘freedom of speech, not freedom of reach,’ is quite simply a field day for extremists. Some of the most well-known perpetrators of hate speech now earn tens of thousands of dollars under the company’s new ad revenue-sharing program. Not only does X profit from hate; now the creators of that hate speech do as well, and the monetary incentive to keep circulating this material is as literal as cutting a check.

Generating blatant disinformation (lies) intended to propagate hate, fear, and dangerous conspiracy theories demonizing members of historically marginalized groups is a familiar strategy for consolidating power and distracting from other real issues. X’s decreasing interest in enforcing its own policies, along with its retraction of previous LGBTQ policy protections, resulted in a record-low score of 33% in GLAAD’s 2023 Social Media Safety Index report, released in July. (I serve on the advisory committee of GLAAD’s Social Media Safety Program, the organization’s platform accountability initiative.)

Thankfully, the anti-LGBTQ atmosphere of X is not in alignment with the basic values of most Americans. In GLAAD’s 2023 Accelerating Acceptance report, a super-majority of 91% of non-LGBTQ Americans said that LGBTQ people should have the freedom to live our lives without discrimination. In that same survey, 86% of respondents agreed that exposure to online hate content leads to real-world violence. A 2022 report from GLAAD, UltraViolet, Women’s March, and Kairos found that a majority of Americans report seeing online threats of violence based on race, gender or sexuality, and that they also experience harm by witnessing harassment against their communities, even when the posts aren’t about them individually. All of which raises the question: just whom does X imagine its product is intended to serve? And which brands would find this a palatable environment in which to advertise their products, much less one with which to be associated?

As of October 27, the one-year anniversary of the change in ownership, X’s hateful conduct policy still includes the standard list of protected categories: “You may not attack other people on the basis of race, ethnicity, national origin, caste, sexual orientation, gender, gender identity, religious affiliation, age, disability, or serious disease.” The policy also still claims: “We prohibit behavior that targets individuals or groups with abuse based on their perceived membership in a protected category.”

X CEO Linda Yaccarino claims that hate speech is under control on the platform. Yet since the company changed ownership last fall and Yaccarino joined as CEO, the ADL and the Center for Countering Digital Hate have documented more antisemitic speech, Color of Change has documented more racist speech, and an audit by the Center for Countering Digital Hate and HRC found that Twitter failed to act on 99% of 100 hateful tweets reported by researchers, even after the company had stated that ‘grooming’ slurs were against its policies.

Indeed, X’s own employment practices belie any claimed commitment to enforcing its own rules. Given the decimation of its Trust and Safety teams, and the ongoing behavior of X’s owner in targeting “individuals or groups with abuse based on their perceived membership in a protected category,” it’s not surprising that both users and advertisers now experience the platform as neither trustworthy nor safe.

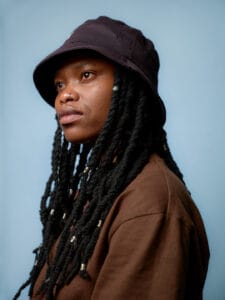

Sarah T. Roberts is a UCLA Associate Professor of Information Studies who specializes in social media content moderation. She is an expert in the areas of internet culture, social media, digital labor, and the intersections of media and technology.