Executive Summary, Key Conclusions and Recommendations, and Methodology

“When social media platforms use algorithms that amplify hate, fail to enforce their own policies against hate, and profit off the targeting of communities, people suffer — and democracy is undermined.”

— The Leadership Conference on Civil and Human Rights[1]

In addition to the annual Platform Scorecard ratings and recommendations, this year’s Social Media Safety Index (SMSI) report provides an overview of the current state of LGBTQ social media safety, privacy, and expression, including sections on: the economy of hate and disinformation, predominant anti-LGBTQ tropes, policy best practices, suppression of LGBTQ content, the connections between online hate and offline harm, regulation and oversight, AI, data protection and privacy, and more.

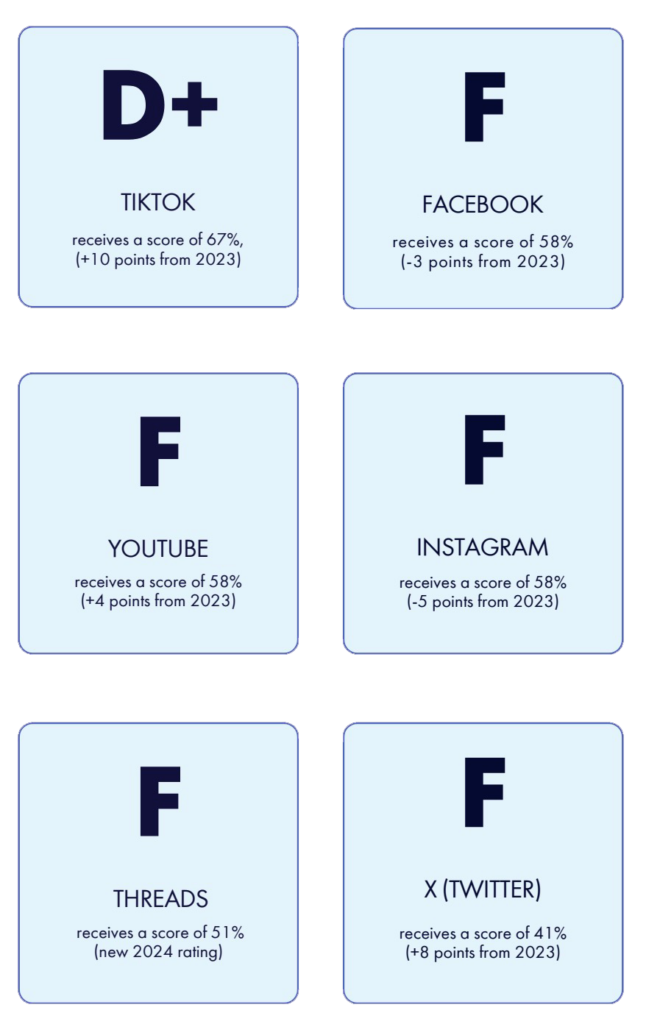

In the 2024 SMSI Platform Scorecard, while some platforms have shown improvements in their scores and others have fallen, overall the scores remain abysmal, with all platforms other than TikTok receiving F grades (TikTok reached a D+).

An Important Note About The Scorecard Ratings: While the six platforms all have policies prohibiting hate and harassment on the basis of sexual orientation, gender identity and/or expression, and other protected characteristics, the SMSI Scorecard does not include indicators to rate them on enforcement of those policies. GLAAD and other monitoring organizations repeatedly encounter failures in enforcement of community guidelines across major platforms. However, given the difficulty involved in assessing enforcement methodologically — which is further complicated by a relative lack of transparency from the companies — these failures are not quantified in the scores below.

Specific LGBTQ safety, privacy, and expression issues identified in the Platform Scorecard, and in the SMSI report in general, include: inadequate content moderation and problems with policy development and enforcement (including issues with both failure to mitigate anti-LGBTQ content and over-moderation/suppression of LGBTQ users); harmful algorithms and lack of algorithmic transparency; inadequate transparency and user controls around data privacy; and an overall lack of transparency and accountability across the industry, among many other issues — all of which disproportionately impact LGBTQ users and other marginalized communities who are uniquely vulnerable to hate, harassment, and discrimination.

These areas of concern are exacerbated for those who are members of multiple communities, including people of color, women, immigrants, people with disabilities, religious minorities, and more. Social media platforms should be safe for everyone, in all of who we are.

Like the 2023 Social Media Safety Index (SMSI), this year’s report illuminates the epidemic of anti-LGBTQ hate, harassment, and disinformation across the major social media platforms: TikTok, X, YouTube, and Meta’s Instagram, Facebook, and newly added Threads. The report takes particular note of the high-follower hate accounts and right-wing figures who continue to manufacture and circulate most of this activity.[2] The devastating impact of hate, disinformation, and conspiracy theory content continues to be one of the most consequential issues of our time, with hate-driven and politically motivated false narratives running rampant online and offline and causing real-world harm to our collective public health, safety, and democracy. As a major January 2024 Online Extremism report from the U.S. Government Accountability Office (GAO) notes: “Research suggests the occurrence of hate crimes is associated with hate speech on the internet [and] suggests individuals radicalized on the internet can perpetrate violence as lone offenders.”

Targeting historically marginalized groups, including LGBTQ people, with fear-mongering, lies, and bigotry is both an intentional strategy of bad actors attempting to consolidate political power and a lucrative enterprise (for the right-wing figures and groups that drive such campaigns and tropes, and for the multibillion-dollar tech companies that host them). It’s clear that regulatory oversight of the entire industry is necessary to address what the Global Disinformation Index calls “the perverse incentives that drive the corruption of our information environment.”[3]

In addition to egregious levels of inadequately moderated anti-LGBTQ material across platforms (for example, see GLAAD’s recent report, Unsafe: Meta Fails to Moderate Extreme Anti-trans Hate Across Facebook, Instagram, and Threads), we also see the corollary problem of over-moderation of legitimate LGBTQ expression, including wrongful takedowns of LGBTQ accounts and creators,[4] mislabeling of LGBTQ content as “adult,” unwarranted demonetization of LGBTQ material under such policies, shadowbanning,[5] and similar suppression of LGBTQ content.[6] Meta’s recent policy change limiting algorithmic eligibility of so-called “political content” (partly defined by Meta as: “social topics that affect a group of people and/or society at large”) is especially concerning.[7]

There’s nothing unusual or surprising about the fact that companies prioritize their corporate profits and bottom line over public safety and the best interests of society (which is why we have regulatory agencies to oversee major industries). Unfortunately, social media companies are currently woefully under-regulated. And platform safety concerns have risen, particularly over the past year, as so many of the world’s largest social media companies have slashed content moderation teams. In an NBC News feature about the wave of layoffs, an anonymous X staffer reflected that “hateful conduct and potentially violent conduct has really increased.”[8] Such downsizing is also negatively impacting the fight against disinformation on social media platforms, as outlined at length in the 2023 Center for Democracy and Technology report Seismic Shifts.[9] The impacts of these business decisions on our information environment will no doubt continue to worsen as major social media companies have shifted towards policies allowing highly consequential, known false information and hate-motivated extremism to proliferate on their platforms.[10]