As the world’s largest LGBTQ media advocacy organization and a leading expert in LGBTQ tech accountability, GLAAD, through its Social Media Safety program, provides ongoing key stakeholder guidance on LGBTQ safety, privacy, and expression to social media platforms, including Meta’s Facebook, Instagram, and Threads. In addition to the following specific guidance to the Oversight Board on the “Explicit AI Images of Female Public Figures” cases (case numbers 2024-007-IG-UA and 2024-008-FB-UA), we urge the Oversight Board to refer to the 2023 edition of our annual Social Media Safety Index report for additional context.

This public comment from GLAAD specifically addresses the Board’s request for input on: “The nature and gravity of harms posed by deepfake pornography including how those harms affect women, especially women who are public figures.”

The use of deepfake technology to create malicious imagery intended to bully, harass, and demean women (especially women who are public figures) is extremely serious. It violates Meta’s existing bullying and harassment policies, which include an array of protections (e.g., “Everyone is protected from: … Severe sexualized commentary. Derogatory sexualized photoshop or drawings.”[1]). It is vitally important that such imagery be clearly interpreted and categorized as malicious, identified as violative by Meta’s moderation systems, and mitigated accordingly. Based on GLAAD’s years of experience with the company’s convoluted interpretations of its own policies, we anticipate that the company will likely treat the word “derogatory” in the policy as an opportunity not to enforce it. To be clear, such maliciously manufactured sexualized deepfake content is, by definition, derogatory.

Also relevant here is the concept of “malign creativity,” as noted in GLAAD’s public comment for the Oversight Board’s September 2023 Post in Polish Targeting Trans People case (2023-023-FB-UA): “Hate content, seeded over time to foster dehumanizing narratives in politics and society, often violates Meta’s Community Guidelines but uses disingenuous rhetoric (including satire and humor) to circumvent safeguards.[2] Meta is failing to adequately confront the issues of ‘malign creativity’ that allow for unmitigated hate speech as bad actors adapt to moderation policies in a dynamic process.[3]”

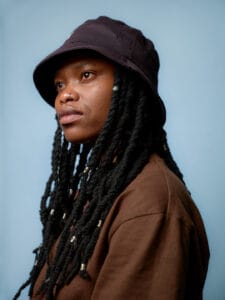

Even before the proliferation of current deepfake technology, such methods of targeting women (especially women of color, Black women in particular, and women who are public figures) were well established as extremely harmful forms of bullying and harassment. As noted in the 2023 Glitch Digital Misogynoir Report: “Digital misogynoir is the continued, unchecked, and often violent dehumanisation of Black women on social media, as well as through other forms such as algorithmic discrimination. Digital misogynoir is particularly dangerous because of its ability to incite offline violence. For example, after spending time on far-right social platforms, white supremacist Dylann Roof went on to murder nine Black church members, seven of whom were women, while they were at bible study. In the UK, misogynoir has recently been prominent in the sustained and targeted harassment of Meghan Markle in the tabloid press and online.”[4]

The Problem of Public Figure Loopholes and Self-Reporting Requirements

The public figure loopholes in several of Meta’s policies harm not only the public figures who remain unprotected by those policies. Because these loopholes allow egregious hate content to remain unmitigated on Meta’s platforms, they also harm members of the protected characteristic (PC) groups who share the identities of those targeted. In this instance, deepfake pornography targets all women, girls, and femme-identified people. A 2022 report commissioned by UltraViolet, GLAAD, Kairos, and Women’s March shows that women, people of color, and LGBTQ people experience higher levels of harassment and threats of violence on social media than other users. It also found that identity-based attacks harm others who share those identities (46% of women feel personally attacked when witnessing harassment of women who are public figures). “This means, substantively speaking, that the problem of online harassment is not only one that affects the victims of harassment themselves, but the witnesses of the harassment.”[5]

Currently, Meta’s Tier 3 Bullying and Harassment policy is worded so that public figures are specifically excluded from these protections: “When self-reported, private minors, private adults, and minor involuntary public figures are protected from the following: … Unwanted manipulated imagery.”[6]

Meta should update this policy to protect public figures from unwanted manipulated imagery. Further, many of these policies require self-reporting, which is prohibitively burdensome, before the company will evaluate such content for mitigation. For example, Meta’s bullying and harassment policy page concludes with a list of items for which “we require additional information or context to enforce.” The list begins with the following relevant policy, which requires self-reporting: “Post content sexualizing a public figure. We will remove this content when we have confirmation from the target or an authorized representative of the target that the content is unwanted.”[7]

This is a common feature of how Meta’s policies are constructed: they contain layers of requirements (especially public figure loopholes and self-reporting requirements) that leave many policies effectively unenforced. For example, Meta’s policy on targeted misgendering (which is explained in further detail here) is effectively diluted by these same requirements.

With this status-quo policy framing, Meta facilitates enormous quantities of harmful misogynist content that plagues Instagram, Facebook, and Threads, and sets a standard that normalizes contempt and hatred of women.[8]

GLAAD has repeatedly engaged with Meta’s trust and safety teams only to hear back incoherent reasoning in which the company seems strangely determined, in as many instances as possible, to render its own policies inapplicable. The company’s goal seems to be to lean, as much as possible, toward loopholes and reasoning that allow harmful content to remain unmitigated, rather than to apply and enforce its policies to protect users from harm.

The Need for Agile, Common-Sense Policy Development and Enforcement

It is crucially important that platforms such as Meta recognize that bad actors will continue to manufacture content, tropes, and vehicles of hate, harassment, and disinformation that intentionally muddy the waters and confuse platforms about their hate-driven nature. Such disingenuous maliciousness must be seen for what it is: cleverness meant to evade community guidelines and avoid being identified as hate speech. Meta (like other companies) has policy development and enforcement teams for this very reason.

Meta’s development of its Holocaust denial policy is a case in point. It shows that it is possible to recognize such malicious creative content for what it is, create a policy to address it, and then enforce that policy. This policy was implemented in October 2020, after years of guidance and input from advocacy groups (and after CEO Mark Zuckerberg’s July 2018 statement about Holocaust denial content, in an interview with journalist Kara Swisher, that “at the end of the day, I don’t believe that our platform should take that down”). Although it took years for Facebook to adopt a policy recognizing such content as hate speech, it was always entirely clear, from day one, that Holocaust denial is a form of antisemitism. It is simply a creative form of it. Any reasonable person who looks at such material in good faith can see its malicious intent and harmful effects.

Similarly, intentional, targeted misgendering and deadnaming of public figures is anti-trans hate speech and bears all of its hallmark qualities. Yet some platforms, including Meta, continue to resist expressly characterizing it as such. (To be clear, this concerns intentional, targeted instances of promoting anti-trans animus, not accidentally getting someone’s pronouns wrong.)

As with the content in previous Oversight Board cases (the Post in Polish Targeting Trans People case, the Altered Video of President Biden case, and others), malicious deepfake pornography is highly consequential and dangerous. It causes real-world harm to the specific people who are targeted and to other women, girls, and femme-identified people, and it contributes to a general pollution of our information ecosystem with toxic content.

It is also important to note that a considerable amount of such content (bullying and harassing people with false, sexualized depictions) is manufactured and amplified by high-follower hate accounts. These accounts are motivated not only by animus toward historically marginalized groups (targeting people on the basis of their protected characteristics is prohibited by Meta’s community guidelines) but also by financial incentives. Maximizing engagement and generating revenue through increasingly toxic, false, and hateful content is a significant motivation for perpetuating such harmful, dangerous, dehumanizing material.

In conclusion, we reiterate that this specific kind of weaponized speech — false and malicious sexualized depictions of women — is a dangerous and prevalent form of hate, harassment, and bullying and has been for many years now. Meta is well aware of all of this (hence the existence of its own policies). Meta should urgently and meaningfully interpret and enforce these policies to effectively mitigate such material, while also prioritizing the equally important need to not suppress or censor legitimate content and accounts.

About the GLAAD Social Media Safety Program

As the leading national LGBTQ media advocacy organization, GLAAD works every day to hold tech companies and social media platforms accountable and to secure safe online spaces for LGBTQ people. The GLAAD Social Media Safety (SMS) program researches, monitors, and reports on a variety of issues facing LGBTQ social media users, with a focus on safety, privacy, and expression. Over the years, the SMS program has consulted directly with platforms and tech companies on some of the most significant LGBTQ policy and product developments. In addition to ongoing advocacy work with platforms (including TikTok, X/Twitter, YouTube, and Meta’s Facebook, Instagram, and Threads, among others) and issuing the highly respected annual Social Media Safety Index (SMSI) report, the SMS program produces resources, guides, publications, and campaigns. It also works actively to educate the general public and raise awareness in the media about LGBTQ social media safety issues, especially anti-LGBTQ hate and disinformation.

[1] Bullying and Harassment | Transparency Center, Meta

[2] United Nations, Report: Online hate increasing against minorities (March 2021); Harel, Tal Orian, et al. “The Normalization of Hatred: Identity, Affective Polarization, and Dehumanization on Facebook in the Context of Intractable Political Conflict.” Social Media + Society, vol. 6, no. 2, Apr. 2020, p. 205630512091398.

[3] Malign Creativity: How Gender, Sex, and Lies are Weaponized Against Women Online, Wilson Center.

[4] The Digital Misogynoir Report, Glitch.

[5] From URL to IRL: The Impact of Social Media on People of Color, Women, and LGBTQ+ Communities, UltraViolet, GLAAD, Kairos, Women’s March.

[6] Bullying and Harassment | Transparency Center, Meta

[7] Bullying and Harassment | Transparency Center, Meta

[8] Social media ‘bombarding’ boys with misogynist content, RTE