Improving Policies, Creating Accountability, and Raising Awareness: The Year in GLAAD Social Media Safety Program Advocacy Work (2023-2024)

GLAAD’s Social Media Safety (SMS) program actively researches, monitors, and reports on a variety of issues facing LGBTQ social media users, with a focus on safety, privacy, and expression. The SMS program has consulted directly with platforms and tech companies on some of the most significant LGBTQ policy and product developments over the years. In addition to ongoing advocacy work with platforms (including TikTok, X/Twitter, YouTube, and Meta’s Facebook, Instagram, Threads, and others) and issuing the highly respected annual Social Media Safety Index (SMSI) report, the SMS program produces resources, guides, publications, and campaigns, and actively works to educate the general public and raise awareness in the media about LGBTQ social media safety issues, especially anti-LGBTQ hate and disinformation.

Below are just a few highlights of the program’s advocacy work, tackling some of the most urgent problems and issues in the field over the past year.

In April 2024, GLAAD launched an Instagram PSA campaign to raise awareness about the alarming implications of Meta’s new “political content” policy, along with an open letter advocacy campaign with Accountable Tech and more than 200 Instagram creators. Meta’s trio of problematic policy moves included: the decision to characterize “social topics that affect a group of people and/or society large” as “political content”; the corresponding decision to no longer algorithmically recommend such content to users; and the equally concerning way the new policy was implemented, by changing all user settings to limit such content by default rather than asking users to opt in. Speaking to The Washington Post, a GLAAD spokesperson explained: “Categorizing ‘social topics that affect a group of people and/or society large’ as ‘political content’ is an appalling move. LGBTQ people’s lives are simply that, our lives. Our lives are not ‘political content’ or political fodder. This is a dangerous move that not only suppresses LGBTQ voices, but decimates opportunities for LGBTQ people to connect with each other, and allies, as our content will be excluded from the algorithm.”

In March 2024, GLAAD released an impactful report, Unsafe: Meta Fails to Moderate Extreme Anti-trans Hate Across Facebook, Instagram, and Threads, which showcases dozens of examples of the shocking, dehumanizing anti-trans content that Meta actively allows on its platforms. GLAAD submitted every content example in the report through Meta’s standard reporting systems; Meta either replied that the posts were not violative or simply took no action on them. The Washington Post broke the news with an exclusive feature highlighting several of the content examples (“In one Instagram post cited in the report,” the Post story notes, “a trans person’s body is shown twisted on the ground while being beaten to death with stones, which have been replaced with the laughing emoji. The caption reads: ‘[trans flag] people are devils.’”). The Post also notes that Meta’s ombudsman, the Oversight Board, had previously “lambasted the company’s failure to enforce rules against anti-trans hate and threats.” Although multiple major media outlets requested comment from Meta on the report (including The Washington Post, Fast Company, Engadget, The Daily Dot, and The Verge), the company did not respond. In the aftermath of the report, Meta removed seven of the 30 reported items without explanation; the other 23 egregiously harmful items remain live.

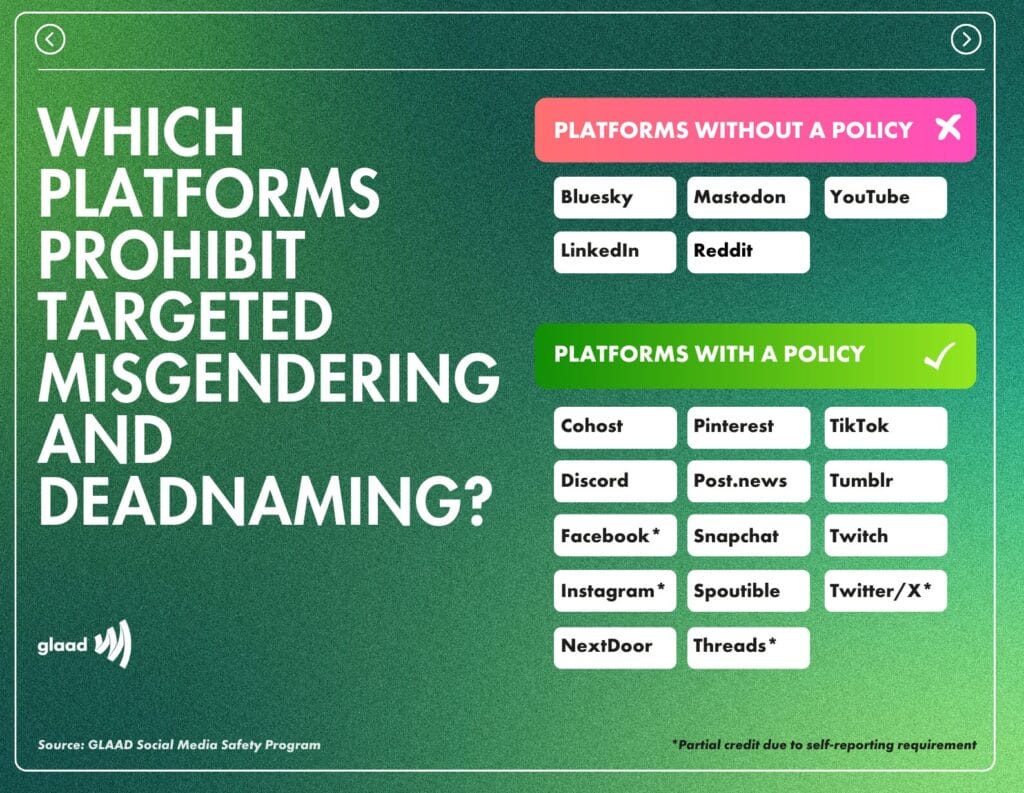

The SMS program saw major victories over the past year in our advocacy work around two key LGBTQ safety policy protections: prohibiting targeted misgendering and deadnaming, and prohibiting the promotion and advertising of harmful so-called “conversion therapy.” After consultation with GLAAD, companies adopting one or both of the policies in recent months include: Snapchat, Discord, Post, Spoutible, Grindr, IFTAS (the non-profit supporting the Fediverse moderator community), and Mastodon.social (Mastodon’s largest server). While these additions do not solve the extremely significant related issue of policy enforcement (which GLAAD is working on as well), they are excellent concrete examples of how the SMS program creates impact. In February 2024, GLAAD released two significant reports documenting that while some platforms still lag behind on these LGBTQ safety policy protections, numerous platforms and apps are increasingly adopting the policies that GLAAD’s SMS program advocates as best practices for the industry.

Educating the general public and raising awareness in the media about LGBTQ social media safety issues is another important part of GLAAD’s Social Media Safety program work. In January 2024, SMS program manager Leanna Garfield joined MSNBC contributor Katelyn Burns on her podcast “Cancel Me Daddy” to discuss how social media companies like Meta and Twitter are profiting from online hate speech against LGBTQ people, especially transgender people, and how GLAAD has been at the forefront of pushing these companies to work toward solutions.

In September 2023, the SMS program submitted an official public comment in the important “Post in Polish Targeting Trans People” case, and in January 2024 the program was heartened by the ruling from the Oversight Board (the body that makes non-binding but precedent-setting rulings about Meta content moderation cases). The Board overturned Meta’s repeated original decisions not to take down a Facebook post targeting transgender people with violent speech, despite more than a dozen requests from community members. The post was an egregious example of anti-trans hate encouraging transgender people to commit suicide: it featured an image of a striped curtain in the blue, pink, and white colors of the transgender flag, with a text overlay in Polish reading: “New technology. Curtains that hang themselves.” The post was removed only after the Oversight Board alerted Meta. The case illuminates systemic failures in the company’s moderation practices, including widespread failure to enforce its own policies (as noted by both the Oversight Board and GLAAD). Following Meta’s response to the ruling in March 2024, the SMS program issued an additional statement condemning Meta’s continuing negligence and ongoing failure to moderate anti-trans hate. GLAAD’s advocacy work on this is ongoing.

In October 2023, on the one-year anniversary of Elon Musk’s takeover of X/Twitter, GLAAD worked with SMSI advisory committee member Sarah T. Roberts (UCLA Associate Professor of Information Studies) to publish a reflection on the plummeting state of the platform: “X is Neither Trusted Nor Safe.” Roberts noted: “Generating blatant disinformation (lies) intended to propagate hate, fear, and dangerous conspiracy theories demonizing members of historically marginalized groups is a familiar strategy for consolidating power and distracting from other real issues. X’s decreasing interest in enforcing their own policies, and their retraction of previous LGBTQ policy protections, resulted in a record-low score of 33% in GLAAD’s 2023 Social Media Safety Index report in July.” X’s platform safety has steadily declined, with ongoing anti-LGBTQ (and especially anti-trans) behavior, rhetoric, and activity running rampant, much of it spearheaded by Musk. GLAAD continues to be a member of Stop Toxic Twitter, a coalition of 60+ civil rights and civil society groups calling on X advertisers to demand a safer platform for their brands and for users. According to Sensor Tower, 75 of the top 100 U.S. advertisers have now ceased their ad spending on X/Twitter, and traffic has steadily declined (down 30% according to a study by Edison Research). Musk’s ongoing personal boosting of hateful and extremist content, and his targeting of civil society groups, journalists, and commentators, remain gravely concerning.

In September 2023, working together with GLAAD’s Spanish-Language & Latine Media program, the SMS program launched Nos Mantenemos Seguros: Guía de seguridad digital LGBTQ, a new Spanish translation of our valuable resource We Keep Us Safe — Guide to Anti-LGBTQ Online Hate and Disinformation. Designed for LGBTQ activists and organizations, journalists and public figures, and anyone who spends time online, the guide includes tips and easy best practices everyone can implement to improve their digital safety.

In June 2023, GLAAD and the Human Rights Campaign facilitated an open letter from more than 250 LGBTQ celebrities, public figures, and allies urging social media companies to address the epidemic of anti-transgender hate on their platforms. Signatories included such high-profile names as Elliot Page, Laverne Cox, Jamie Lee Curtis, Shawn Mendes, Janelle Monáe, Gabrielle Union, Judd Apatow, Ariana Grande, and Jonathan Van Ness, among many others. The letter received extensive national media coverage highlighting its plea for platforms to urgently create and share plans for addressing: content that spreads malicious lies and disinformation about healthcare for transgender youth; accounts and postings that perpetuate anti-LGBTQ extremist hate and disinformation (including the anti-LGBTQ “groomer” trope), in violation of platform policies; dehumanizing, hateful attacks on prominent transgender public figures and influencers; and anti-transgender hate speech, including targeted misgendering, deadnaming, and hate-driven tropes.